You don't need a Qnet-specific driver for your hardware if, for example, you're implementing a local area network using Ethernet hardware, or TCP/IP networking that requires IP encapsulation.

In these cases, the underlying io-pkt* and TCP/IP layers are sufficient to interface with the Qnet layer for transmitting and receiving packets. You use standard QNX Neutrino drivers to implement Qnet over a local area network, or to encapsulate Qnet messages in IP (TCP/IP) so that Qnet traffic can be routed to remote networks.

The driver essentially performs three functions: transmitting a packet, receiving a packet, and resolving the remote node's interface (address).
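The three functions listed above can be pictured as a small table of function pointers. The following is only a minimal sketch with an in-memory loopback standing in for real hardware. Every name in it (`sketch_driver_t`, `sketch_addr_t`, `sketch_loopback_init()`, `sketch_roundtrip()`, the node name `"peer"`) is hypothetical and chosen for illustration; it is not the actual Qnet driver API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical names throughout: this is NOT the real Qnet driver API. */
#define SKETCH_MAX_PKT  1500
#define SKETCH_ADDR_LEN 6

typedef struct {
    uint8_t bytes[SKETCH_ADDR_LEN];   /* e.g., a MAC address for raw Ethernet */
} sketch_addr_t;

typedef struct sketch_driver {
    /* Transmit one packet to dst; returns 0 on success, -1 on failure. */
    int (*tx)(struct sketch_driver *drv, const sketch_addr_t *dst,
              const void *buf, size_t len);
    /* Receive one packet into buf; returns the byte count, or -1 if none. */
    int (*rx)(struct sketch_driver *drv, void *buf, size_t cap);
    /* Resolve a remote node's interface address; returns 0 on success. */
    int (*resolve)(struct sketch_driver *drv, const char *node,
                   sketch_addr_t *out);
    /* Loopback state: a single pending packet instead of real hardware. */
    uint8_t pending[SKETCH_MAX_PKT];
    size_t  pending_len;
} sketch_driver_t;

static int loop_tx(sketch_driver_t *drv, const sketch_addr_t *dst,
                   const void *buf, size_t len)
{
    (void)dst;                         /* the loopback ignores the destination */
    if (len == 0 || len > SKETCH_MAX_PKT || drv->pending_len != 0)
        return -1;
    memcpy(drv->pending, buf, len);
    drv->pending_len = len;
    return 0;
}

static int loop_rx(sketch_driver_t *drv, void *buf, size_t cap)
{
    size_t n = drv->pending_len;
    if (n == 0 || n > cap)
        return -1;
    memcpy(buf, drv->pending, n);
    drv->pending_len = 0;
    return (int)n;
}

static int loop_resolve(sketch_driver_t *drv, const char *node,
                        sketch_addr_t *out)
{
    /* Made-up, locally administered address for the one known node. */
    static const uint8_t mac[SKETCH_ADDR_LEN] = { 0x02, 0, 0, 0, 0, 0x01 };
    (void)drv;
    if (strcmp(node, "peer") != 0)
        return -1;
    memcpy(out->bytes, mac, SKETCH_ADDR_LEN);
    return 0;
}

void sketch_loopback_init(sketch_driver_t *drv)
{
    assert(drv != NULL);
    drv->tx = loop_tx;
    drv->rx = loop_rx;
    drv->resolve = loop_resolve;
    drv->pending_len = 0;
}

/* Resolve, transmit, then receive; returns 0 if the payload survives. */
int sketch_roundtrip(void)
{
    static const char msg[] = "hello";
    sketch_driver_t drv;
    sketch_addr_t peer;
    char in[16];
    int n;

    sketch_loopback_init(&drv);
    if (drv.resolve(&drv, "peer", &peer) != 0)
        return -1;
    if (drv.tx(&drv, &peer, msg, sizeof msg) != 0)
        return -1;
    n = drv.rx(&drv, in, sizeof in);
    if (n != (int)sizeof msg)
        return -1;
    return memcmp(in, msg, sizeof msg) == 0 ? 0 : -1;
}
```

The point of the sketch is the shape of the interface: the layer above only calls `tx`, `rx`, and `resolve`, so the code behind those pointers can be swapped without the caller noticing.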

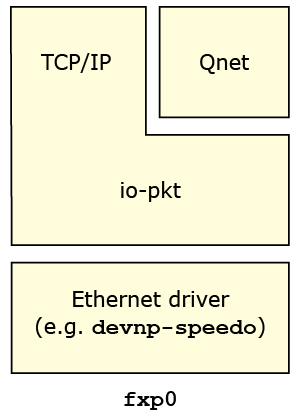

First, let's define what exactly a driver is, from

Qnet's perspective. When Qnet is run with its default binding

of raw Ethernet (e.g., bind=en0), you'll find the following

arrangement of layers that exists in the node:

In the above case, io-pkt* is actually the driver that transmits and receives packets, and thus acts as a hardware-abstraction layer. Qnet doesn't care about details of the Ethernet hardware or driver.

So, if you simply want new Ethernet hardware supported, you don't need to write a Qnet-specific driver. What you need is just a normal Ethernet driver that knows how to interface to io-pkt*.

There is a bit of code at the very bottom of Qnet that's specific to io-pkt* and has knowledge of exactly how io-pkt* likes to transmit and receive packets. This is the L4 driver API abstraction layer.

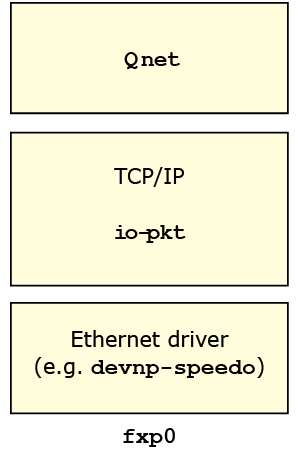

Let's take a look at the arrangement of layers that

exist in the node when Qnet is run with the optional binding of

IP encapsulation (e.g., bind=ip):

As far as Qnet is concerned, the TCP/IP stack is now its driver. This stack is responsible for transmitting and receiving packets.
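To summarize the two bindings described above, here is a small illustrative sketch that maps a bind option string to the layer that acts as Qnet's driver. The function and type names (`sketch_classify_bind()`, `sketch_driver_for()`, `sketch_bind_t`) are hypothetical, and treating any value other than `ip` as an Ethernet interface name is an assumption made for this example. It doesn't reflect how Qnet itself parses its options.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper types; not part of Qnet. */
typedef enum {
    SKETCH_BIND_UNKNOWN,
    SKETCH_BIND_ETHERNET,   /* e.g., bind=en0: raw Ethernet */
    SKETCH_BIND_IP          /* bind=ip: IP encapsulation */
} sketch_bind_t;

/* Classify an option string of the form "bind=<value>". */
sketch_bind_t sketch_classify_bind(const char *opt)
{
    static const char prefix[] = "bind=";
    const size_t plen = sizeof prefix - 1;
    const char *val;

    if (opt == NULL || strncmp(opt, prefix, plen) != 0)
        return SKETCH_BIND_UNKNOWN;
    val = opt + plen;
    if (strcmp(val, "ip") == 0)
        return SKETCH_BIND_IP;
    /* Assumption: any other non-empty value names an Ethernet interface. */
    return *val != '\0' ? SKETCH_BIND_ETHERNET : SKETCH_BIND_UNKNOWN;
}

/* Name the layer that acts as Qnet's driver for a given binding. */
const char *sketch_driver_for(sketch_bind_t bind)
{
    switch (bind) {
    case SKETCH_BIND_ETHERNET: return "io-pkt*";
    case SKETCH_BIND_IP:       return "TCP/IP stack";
    default:                   return "unknown";
    }
}
```

With these helpers, `sketch_driver_for(sketch_classify_bind("bind=en0"))` yields `"io-pkt*"`, and the same call with `"bind=ip"` yields `"TCP/IP stack"`, matching the two layer arrangements described in this section.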