The SMMU manager (smmuman) service supports spatial isolation of DMA devices on systems with IOMMU/SMMUs.

Isolation

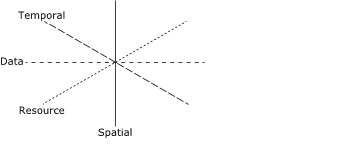

The figure below presents the four isolation axes that can be implemented in a software system. Spatial isolation is fundamental to all other forms of isolation.

Figure 1. The isolation axes: spatial, resource, data, temporal.

In a QNX system, the OS kernel (procnto) uses MMU page tables to control CPU-initiated attempts to access memory. In a virtualized system running the QNX Neutrino OS plus its virtualization extension (a libmod_qvm variant) as the host, a second layer of page tables (also called intermediate page tables) is used to manage guest access to memory.
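To make the two layers concrete, here is a minimal sketch of a two-stage lookup for a CPU access from a guest, assuming 4 KiB pages and tiny single-level tables indexed by page number. The `two_stage_tables` type and the `lookup()` and `translate_two_stage()` functions are hypothetical illustrations; real page tables are multi-level and walked by hardware, and this code is not part of procnto or libmod_qvm.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT 12u                       /* assumed 4 KiB pages */
#define PAGE_MASK  ((1u << PAGE_SHIFT) - 1u)
#define NPAGES     16u                       /* tiny address spaces for illustration */
#define INVALID    UINT32_MAX                /* marks an unmapped page */

/* Hypothetical tables:
   stage1: guest page table, guest-virtual page -> guest-physical page
   stage2: intermediate table, guest-physical page -> host-physical page */
typedef struct {
    uint32_t stage1[NPAGES];
    uint32_t stage2[NPAGES];
} two_stage_tables;

/* One table lookup; returns false if the page is unmapped (a translation fault). */
static bool lookup(const uint32_t *table, uint32_t addr, uint32_t *out)
{
    uint32_t page = addr >> PAGE_SHIFT;
    if (page >= NPAGES || table[page] == INVALID)
        return false;
    *out = (table[page] << PAGE_SHIFT) | (addr & PAGE_MASK);
    return true;
}

/* Guest-virtual -> guest-physical (stage 1) -> host-physical (stage 2). */
static bool translate_two_stage(const two_stage_tables *t,
                                uint32_t gva, uint32_t *hpa)
{
    uint32_t gpa;
    assert(t != NULL && hpa != NULL);
    return lookup(t->stage1, gva, &gpa) && lookup(t->stage2, gpa, hpa);
}
```

For example, with stage-1 entry 1 → 3 and stage-2 entry 3 → 7, guest-virtual address 0x1234 translates to guest-physical 0x3234 and then to host-physical 0x7234. An access fails if either stage has no mapping, which is how the host can confine a guest even if the guest's own page tables are wrong.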

However, MMUs can't be used to manage memory accesses by Direct Memory Access (DMA) devices. The following section describes a different hardware mechanism, the IOMMU/SMMU, which manages DMA device access to memory.

DMA devices and IOMMU/SMMUs

A non-CPU-initiated read or write is a read or write request from a DMA device (e.g., a GPU, network card, or sound card).

The CPU doesn't control memory access by a DMA device. Instead, a DMA device takes control of the memory bus to gain direct access to system memory. Because no CPU is involved in the access, an OS can't manage a DMA device's access to system memory without support from IOMMU/SMMU hardware.

Most importantly, the protections the OS provides against incorrect (and possibly malicious) memory accesses that go through the CPU don't apply to accesses by DMA devices. On its own, the OS can't prevent a DMA device from accessing memory it isn't authorized to access.

An I/O Memory Management Unit (IOMMU) or System Memory Management Unit (SMMU) is a hardware component that provides translation and access control for non-CPU-initiated reads and writes, similar to the translation and access control that page tables provide for CPU-initiated reads and writes.
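The sketch below models that translation and access control in software. It assumes a flat list of mappings keyed by a per-device requester identifier, rather than the multi-level, per-context tables a real IOMMU/SMMU walks in hardware. The `smmu_mapping` type, its `sid` field, the permission bits, and `smmu_translate()` are hypothetical names for illustration only; they are not part of the smmuman API.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical permission bits for a mapping. */
enum { PERM_READ = 1u << 0, PERM_WRITE = 1u << 1 };

/* One mapping the IOMMU/SMMU has been programmed with (hypothetical layout):
   device-visible addresses [iova, iova + size) map to physical addresses
   starting at pa, for requests from the device identified by sid. */
typedef struct {
    uint32_t sid;      /* identifier the hardware uses to tell requesters apart */
    uint64_t iova;
    uint64_t pa;
    uint64_t size;
    unsigned perms;    /* PERM_READ and/or PERM_WRITE */
} smmu_mapping;

typedef enum { DMA_OK, DMA_FAULT_UNMAPPED, DMA_FAULT_PERMISSION } dma_result;

/* Check and translate one DMA request of len bytes with the given access type. */
static dma_result smmu_translate(const smmu_mapping *maps, size_t n,
                                 uint32_t sid, uint64_t iova, uint64_t len,
                                 unsigned access, uint64_t *pa_out)
{
    for (size_t i = 0; i < n; i++) {
        const smmu_mapping *m = &maps[i];
        /* The whole request must fall inside one mapping for this requester;
           the subtractions are ordered to avoid unsigned overflow. */
        if (m->sid != sid || iova < m->iova || len > m->size ||
            iova - m->iova > m->size - len)
            continue;
        if ((m->perms & access) != access)
            return DMA_FAULT_PERMISSION;   /* mapped, but not for this access */
        *pa_out = m->pa + (iova - m->iova);
        return DMA_OK;
    }
    return DMA_FAULT_UNMAPPED;             /* block the access and report it */
}
```

With a mapping that grants one device read/write access to a 4 KiB window, a write inside the window is translated; the same write from a device whose mapping allows only reads returns `DMA_FAULT_PERMISSION`; and a request that runs past the end of the window returns `DMA_FAULT_UNMAPPED`.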

Hardware limitations

Note that on some boards the IOMMU/SMMU hardware doesn't provide the smmuman service with the information it requires to map individual devices and report their attempted transgressions.

For example, on some ARM boards the IOMMU/SMMU doesn't provide the smmuman service with the information it requires to identify individual PCI devices. Similarly, on x86 boards the VT-d hardware can't identify or control individual MMIO devices that perform DMA. Additionally, some boards might not have enough stream identifiers (SIDs) to assign a unique SID to every hardware device, so multiple hardware devices may have to share an SID.
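When multiple devices share an SID, the IOMMU/SMMU sees them as a single requester: any region programmed for that SID is open to all of them, and a fault record can be traced back only to the group, not to the device that caused it. The sketch below illustrates the attribution problem; the `dma_device` table, the device names, and the `fault_candidates()` helper are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical board description: the SID each DMA-capable device uses. */
typedef struct {
    const char *name;
    uint32_t sid;
} dma_device;

/* A fault record identifies only the SID, not the device. Return how many
   devices could have issued the faulting request, storing up to out_cap
   of their names in out. */
static size_t fault_candidates(const dma_device *devs, size_t n,
                               uint32_t fault_sid,
                               const char **out, size_t out_cap)
{
    size_t count = 0;
    for (size_t i = 0; i < n; i++) {
        if (devs[i].sid != fault_sid)
            continue;
        if (count < out_cap)
            out[count] = devs[i].name;
        count++;
    }
    return count;
}
```

With a GPU on its own SID and two other devices sharing a second SID, a fault on the first SID names exactly one device, while a fault on the shared SID can only be narrowed down to two candidates.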

Pass-through DMA devices in virtualized systems

In a virtualized system, pass-through devices are devices that are “owned” by a guest running in a VM. A driver in the guest controls the device hardware directly.

For a DMA device to be usable as a pass-through device in a virtualized system, an IOMMU/SMMU is required for the following reasons:

A DMA device that is passed through to a guest won't work if its memory access is restricted to the host-physical memory regions assigned to the guest's memory. It requires its own region in host-physical memory, and this region must be mapped to guest-physical memory.

An IOMMU/SMMU is required to map the guest-physical addresses visible to the DMA device to host-physical addresses. Because the DMA device is owned by the guest, it is configured to output guest-physical addresses to the bus, and the IOMMU/SMMU must convert these addresses to host-physical addresses before they reach the memory controller.
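A minimal sketch of that conversion, assuming the host has programmed the IOMMU/SMMU with a list of guest-physical to host-physical regions for the pass-through device. The `guest_region` type and `gpa_to_hpa()` are hypothetical, and the addresses in the example are made up for illustration.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical region entry: guest-physical [gpa, gpa + size) is backed by
   host-physical memory starting at hpa. */
typedef struct {
    uint64_t gpa;
    uint64_t hpa;
    uint64_t size;
} guest_region;

/* Convert a guest-physical address output by a pass-through DMA device
   into the host-physical address the memory controller must see. */
static bool gpa_to_hpa(const guest_region *map, size_t n,
                       uint64_t gpa, uint64_t *hpa)
{
    for (size_t i = 0; i < n; i++) {
        if (gpa >= map[i].gpa && gpa - map[i].gpa < map[i].size) {
            *hpa = map[i].hpa + (gpa - map[i].gpa);
            return true;
        }
    }
    return false;   /* outside every programmed region: the access should fault */
}
```

With a region at guest-physical 0x40000000 backed by host-physical 0x880000000, a device writing to guest-physical 0x40001000 reaches host-physical 0x880001000. Without the translation, the device would write to host-physical 0x40001000, memory the guest may not own.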

The hypervisor host layer has no knowledge of a device that is passed through to a guest, and a DMA device's memory accesses don't go through a CPU that could trap transgressions. In the absence of an IOMMU/SMMU, if the guest OS fails to notice that a DMA device is misbehaving, no further checks are available.

To protect against misbehaving pass-through DMA devices, an IOMMU/SMMU must be programmed with the memory regions that each DMA device (regardless of ownership) is permitted to access.