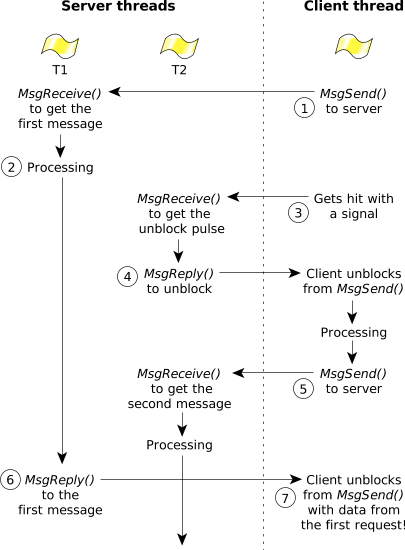

Even if you use the _NTO_CHF_UNBLOCK flag as described above, there's still one more synchronization problem to deal with. Suppose that you have multiple server threads blocked on the MsgReceive() function, waiting for messages or pulses, and the client sends you a message. One thread goes off and begins the client's work. While that's happening, the client wishes to unblock, so the kernel generates the unblock pulse. Another thread in the server receives this pulse. At this point, there's a race condition—the first thread could be just about ready to reply to the client. If the second thread (that got the pulse) does the reply, then there's a chance that the client would unblock and send another message to the server, with the server's first thread now getting a chance to run and replying to the client's second request with the first request's data:

Figure 1. Confusion in a multithreaded server.

Or, if the thread that got the pulse is just about to reply to the client, and the first thread does the reply, then you have the same situation: the first thread unblocks the client, who sends another request, and the second thread (that got the pulse) now unblocks the client's second request.

The situation is that you have two parallel flows of execution (one caused by the message, and one caused by the pulse). Ordinarily, we'd immediately recognize this as a situation that requires a mutex. Unfortunately, this causes a problem—the mutex would have to be acquired immediately after the MsgReceive() and released before the MsgReply(). While this will indeed work, it defeats the whole purpose of the unblock pulse! (The server would either get the message and ignore the unblock pulse until after it had replied to the client, or the server would get the unblock pulse and cancel the client's second operation.)

A solution that looks promising (but is ultimately doomed to failure) would be to have a fine-grained mutex. What I mean by that is a mutex that gets locked and unlocked only around small portions of the control flow (the way that you're supposed to use a mutex, instead of blocking the entire processing section, as proposed above). You'd set up a “Have we replied yet?” flag in the server, and this flag would be cleared when you received a message and set when you replied to a message. Just before you replied to the message, you'd check the flag. If the flag indicates that the message has already been replied to, you'd skip the reply. The mutex would be locked and unlocked around the checking and setting of the flag.
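To make the proposal concrete, here's a minimal sketch of the flag-plus-mutex idea in portable C with POSIX threads. The names `client_state_t`, `note_message_received()`, and `claim_reply()` are hypothetical and aren't part of any QNX API; the comments only mark where the real MsgReceive() and MsgReply() calls would sit.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>

/* Hypothetical per-client bookkeeping; not part of the QNX API. */
typedef struct {
    pthread_mutex_t lock;
    bool replied;               /* the "Have we replied yet?" flag */
} client_state_t;

void client_state_init(client_state_t *cs)
{
    int rc = pthread_mutex_init(&cs->lock, NULL);
    assert(rc == 0);
    (void)rc;
    cs->replied = true;         /* no request is outstanding yet */
}

/* Would be called right after MsgReceive() hands us a client message. */
void note_message_received(client_state_t *cs)
{
    pthread_mutex_lock(&cs->lock);
    cs->replied = false;        /* a reply is now owed to the client */
    pthread_mutex_unlock(&cs->lock);
}

/*
 * Would be called just before MsgReply(). Returns true if the caller
 * should go ahead and reply, false if some other thread got there first.
 */
bool claim_reply(client_state_t *cs)
{
    pthread_mutex_lock(&cs->lock);
    bool should_reply = !cs->replied;
    cs->replied = true;
    pthread_mutex_unlock(&cs->lock);
    return should_reply;
}
```

Note that the mutex protects only the flag itself. Nothing ties `note_message_received()` to the receive that precedes it, or `claim_reply()` to the reply that follows it, and that gap is what matters.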

Unfortunately, this won't work because we're not always dealing with two parallel flows of execution—the client won't always get hit with a signal during processing (causing an unblock pulse). Here's the scenario where it breaks:

- The client sends a message to the server; the client is now blocked, the server is now running.

- Since the server received a request from the client, the flag is reset to 0, indicating that we still need to reply to the client.

- The server replies normally to the client (because the flag was set to 0) and sets the flag to 1, indicating that, if an unblock pulse arrives, it should be ignored.

- (Problems begin here.) The client sends a second message to the server and, almost immediately after sending it, gets hit with a signal; the kernel sends an unblock pulse to the server.

- The server thread that receives the message was about to acquire the mutex in order to check the flag, but didn't quite get there (it got preempted).

- Another server thread now gets the pulse and, because the flag is still set to 1 from the last time, ignores the pulse.

- Now the server's first thread gets the mutex and clears the flag.

- At this point, the unblock event has been lost.
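The sequence above can be replayed deterministically in a small model. This is only a sketch under stated assumptions: `server_model_t` and the helper functions are hypothetical names, the two server threads are simulated by calling their steps in a fixed order, and the mutex is left out because the steps already run one at a time.

```c
#include <stdbool.h>

/* Hypothetical model of the server's state; 1 means "already replied". */
typedef struct {
    int  replied_flag;
    bool unblock_honored;
} server_model_t;

/* Message thread: after receiving a message, clear the flag. */
static void message_thread_clear_flag(server_model_t *s)
{
    s->replied_flag = 0;
}

/* Message thread: reply to the client and set the flag. */
static void message_thread_reply(server_model_t *s)
{
    s->replied_flag = 1;
}

/* Pulse thread: act on the unblock pulse only if a reply is still owed. */
static void pulse_thread_handle_unblock(server_model_t *s)
{
    if (s->replied_flag == 0) {
        s->unblock_honored = true;  /* would unblock the client here */
        s->replied_flag = 1;
    }
    /* Otherwise the flag says "already replied", so the pulse is ignored. */
}

/*
 * Replays the client's second request. If message_thread_preempted is
 * true, the pulse thread runs before the message thread clears the flag.
 * Returns true if the unblock request was lost.
 */
bool replay_second_request(bool message_thread_preempted)
{
    server_model_t s = { .replied_flag = 1, .unblock_honored = false };

    /* First request: received, flag cleared, replied, flag set. */
    message_thread_clear_flag(&s);
    message_thread_reply(&s);

    /* Second request arrives, followed at once by the unblock pulse. */
    if (message_thread_preempted) {
        pulse_thread_handle_unblock(&s);   /* sees the stale 1 */
        message_thread_clear_flag(&s);
    } else {
        message_thread_clear_flag(&s);
        pulse_thread_handle_unblock(&s);   /* sees 0, honors the pulse */
    }

    /* Lost: a reply is still owed, yet nobody acted on the unblock. */
    return s.replied_flag == 0 && !s.unblock_honored;
}
```

Calling `replay_second_request(true)` reproduces the failing order and reports the unblock as lost; `replay_second_request(false)` shows that the same code behaves correctly when the message thread happens to clear the flag first. The outcome depends purely on scheduling.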

If you refine the flag to indicate more states (such as pulse received, pulse replied to, message received, message replied to), you'll still run into a synchronization race condition because there's no way for you to create an atomic binding between the flag and the receive and reply function calls. (Fundamentally, that's where the problem lies—the small timing windows after a MsgReceive() and before the flag is adjusted, and after the flag is adjusted just before the MsgReply().) The only way to get around this is to have the kernel keep track of the flag for you.