The hypervisor uses an IOMMU/SMMU Manager (smmuman) that ensures that no pass-through DMA device is able to access host-physical memory to which it has not been explicitly granted access.

DMA devices and IOMMUs/SMMUs

A non-CPU-initiated read or write is a read or write request from a Direct Memory Access (DMA) device (e.g., GPU, network card, sound card). An IOMMU (SMMU on ARM architectures) is a hardware/firmware component that provides translation and access control for non-CPU-initiated reads and writes, similar to the translation and access control that second-stage page tables provide for CPU-initiated reads and writes.
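The translation and access control described above can be sketched with a toy model. This is only an illustration of the concept, not how any IOMMU/SMMU or the smmuman service is implemented; the class `ToyIommu`, its methods, the 4 KiB page size, and the device names are all hypothetical.

```python
from dataclasses import dataclass

PAGE_SIZE = 0x1000  # 4 KiB pages, chosen only for illustration


@dataclass(frozen=True)
class Mapping:
    host_page: int   # host-physical page number
    writable: bool   # False means the device may only read


class ToyIommu:
    """Hypothetical per-device translation tables with permission checks."""

    def __init__(self):
        # device name -> {device-visible page number: Mapping}
        self._tables = {}

    def map_page(self, device, dev_addr, host_addr, writable=True):
        table = self._tables.setdefault(device, {})
        table[dev_addr // PAGE_SIZE] = Mapping(host_addr // PAGE_SIZE, writable)

    def translate(self, device, dev_addr, write=False):
        """Return the host-physical address, or raise PermissionError."""
        mapping = self._tables.get(device, {}).get(dev_addr // PAGE_SIZE)
        if mapping is None:
            raise PermissionError(f"{device}: no mapping for {dev_addr:#x}")
        if write and not mapping.writable:
            raise PermissionError(f"{device}: read-only page at {dev_addr:#x}")
        return mapping.host_page * PAGE_SIZE + dev_addr % PAGE_SIZE
```

In this model, a device with no table entry for an address is rejected in the same way as a device that attempts a write to a read-only page.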

Hardware limitations

Note that on some boards the IOMMU/SMMU hardware doesn't provide the smmuman service with the information it requires to map individual devices and report their attempted transgressions.

For example, on some ARM boards the IOMMU doesn't provide the smmuman service with the information it requires to identify individual PCI devices. Similarly, on x86 boards the VT-d hardware can't identify or control individual MMIO devices that do DMA. Additionally, some boards might not have enough session identifiers (SIDs) to be able to assign a unique SID to every hardware device, so multiple hardware devices may have to share an SID.

These sorts of limitations mean that on these boards the smmuman service may not be able to map individual devices. In a hypervisor system, the service may be able to map devices passed through to a guest to the memory allocated to that guest's VM, and report attempts by these devices to access memory outside the VM's memory, but it may not be able to report attempts by individual devices to access memory outside their allocated memory but inside the VM's allocated memory.
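The effect of a shared SID on reporting can be sketched as follows. The sketch assumes, purely for illustration, that a rejected access is reported with only the SID attached; the function names and the device-to-SID assignments are hypothetical.

```python
from collections import defaultdict


def devices_by_sid(assignments):
    """Group device names by the identifier the IOMMU/SMMU sees for them."""
    groups = defaultdict(list)
    for device, sid in assignments.items():
        groups[sid].append(device)
    return dict(groups)


def attribute_fault(groups, faulting_sid):
    """Return the one device behind the SID, or None if the SID is shared."""
    candidates = groups.get(faulting_sid, [])
    return candidates[0] if len(candidates) == 1 else None


# Hypothetical board where two devices must share SID 2.
groups = devices_by_sid({"gpu0": 1, "nic0": 2, "audio0": 2})
print(attribute_fault(groups, 1))  # a unique SID identifies its device
print(attribute_fault(groups, 2))  # a shared SID cannot be attributed
```

When the SID is shared, the most that can be said is that one of the devices in the group made the access.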

Responsibilities of the smmuman service

The SMMU manager (smmuman service) works with the IOMMUs or SMMUs on supported hardware platforms to:

- manage guest-physical memory to host-physical memory translations and access for non-CPU-initiated reads and writes (i.e., for DMA devices).

- ensure that no pass-through device can access host-physical memory outside its mapped (permitted) host-physical memory location.

Using smmuman in a hypervisor system

In a QNX hypervisor system, smmuman is a service that runs in the hypervisor host domain and is started at hypervisor host startup.

When it starts, the smmuman service learns which SMMUs on the board control which DMA devices. Later, qvm process instances will be able to ask the smmuman service to inform the board SMMUs of the memory locations DMA devices are permitted to access.

With the information about DMA devices obtained at startup, during operation the IOMMUs/SMMUs will know to reject any attempts by DMA devices to access memory outside their permitted locations.

During operation, smmuman monitors the system IOMMUs/SMMUs. If all DMA devices are behaving as expected, smmuman does nothing except continue monitoring. However, if a DMA device attempts to access memory outside its permitted locations, the IOMMU/SMMU hardware rejects the attempt, and smmuman records the incident internally.

Clients may use the smmuman API provided in libsmmu to obtain the information and output it to a useful location, such as to the system logger (see slogger2 in the QNX Neutrino OS Utilities Reference). You can then use this information to learn more about the source of the illegal memory access attempt, and troubleshoot the problem.
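The record-then-report pattern described above might look like the following sketch. It does not use libsmmu or slogger2: the `FaultLog` class, its bounded capacity, and the use of Python's standard `logging` module as the output are all assumptions made for illustration.

```python
import logging
from collections import deque


class FaultLog:
    """Hypothetical internal record of rejected DMA accesses."""

    def __init__(self, capacity=64):
        # Bounded buffer: the oldest entries are dropped when it is full.
        self._faults = deque(maxlen=capacity)

    def record(self, device, address, write):
        self._faults.append((device, address, "write" if write else "read"))

    def drain(self):
        """Return all recorded faults and clear the record."""
        faults = list(self._faults)
        self._faults.clear()
        return faults


def report_faults(fault_log, logger):
    """Client side: fetch recorded faults and send them to a logger."""
    faults = fault_log.drain()
    for device, address, kind in faults:
        logger.warning("%s: rejected DMA %s at %#x", device, kind, address)
    return len(faults)
```

A client would call something like `report_faults()` periodically, or whenever it is notified that a fault has occurred.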

smmuman and qvm

The qvm process instances in a QNX hypervisor system are smmuman clients:

- If smmuman is not running, qvm process instances refuse to include in their VMs any pass-through devices they know are DMA devices, and refuse to start (see the loc option's “d” and “n” access type attributes in the “Configuration” chapter).

- If smmuman is running and reports that it doesn't know how to handle a DMA pass-through device, the qvm process will report a problem and exit.

There is one exception to the above rules of behavior: unity-mapped guests. A guest is unity-mapped if all guest-physical memory allocated in the guest's VM is mapped directly to the host-physical memory (e.g., 0x80000000 in the guest maps to 0x80000000 in the host).

If a guest is unity-mapped and smmuman isn't running or doesn't know how to handle a device, the qvm process instance hosting the guest will report the problem as expected, but it will still start.
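The rules above, including the unity-mapped exception, can be summarized as a small decision function. This is a sketch of the documented behavior only, not qvm code; the function names, the `Outcome` values, and the `(guest address, host address, size)` tuple layout are hypothetical.

```python
from enum import Enum


class Outcome(Enum):
    START = "start"
    START_WITH_REPORT = "report the problem, then start"
    REFUSE = "report the problem and refuse to start"


def is_unity_mapped(regions):
    """regions: iterable of (guest_phys_addr, host_phys_addr, size)."""
    return all(guest == host for guest, host, _size in regions)


def qvm_startup_outcome(smmuman_running, device_handled, unity_mapped):
    """Outcome for a VM that includes a pass-through DMA device."""
    if smmuman_running and device_handled:
        return Outcome.START
    # smmuman is absent or can't handle the device: only a
    # unity-mapped guest is still allowed to start.
    return Outcome.START_WITH_REPORT if unity_mapped else Outcome.REFUSE


regions = [(0x80000000, 0x80000000, 0x10000000)]
print(qvm_startup_outcome(False, False, is_unity_mapped(regions)))
```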

Information about IOMMU/SMMU control of DMA devices

Information about which IOMMU/SMMU controls which DMA device may be available in different locations, depending on the board architecture and the specific board itself (e.g., in ACPI tables, in board-specific code). To obtain this information, smmuman queries the firmware, and accepts user-input configuration information.

By default, the user-input information will override the information obtained from the board, so that it can replace corrupt or otherwise unreliable information on the firmware. However, the user may request that smmuman keep the information obtained from the board and merge it with the user-input information.
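The difference between the default (override) behavior and the merge behavior can be sketched as below. The function name and the dictionary layout are hypothetical. The sketch also makes two assumptions that the text above doesn't state: that the firmware information is used when no user input is given, and that the user-input entry wins when both sources describe the same device in merge mode.

```python
def resolve_device_map(firmware, user, merge=False):
    """Combine device-to-SMMU information from firmware and user input.

    firmware, user: dicts mapping a device name to the SMMU controlling it.
    """
    if not user:
        return dict(firmware)      # assumption: no user input, use firmware
    if not merge:
        return dict(user)          # default: user input replaces firmware
    combined = dict(firmware)      # merge: start from the firmware data...
    combined.update(user)          # ...assumption: user entries win conflicts
    return combined


firmware = {"nic0": "smmu0", "gpu0": "smmu1"}
user = {"nic0": "smmu1"}
print(resolve_device_map(firmware, user))              # {'nic0': 'smmu1'}
print(resolve_device_map(firmware, user, merge=True))  # both devices kept
```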

The smmuman service in QNX guests

If you are implementing pass-through devices anywhere on a system with a QOS or QNX Neutrino OS guest that will be certified to a safety standard, you should implement the smmuman service in the hypervisor host and in your QNX Neutrino OS guest. Your guest will use the smmu vdev, which implements for a guest the IOMMU/SMMU functionality needed in a VM (see vdev smmu in the “Virtual Device Reference” chapter).

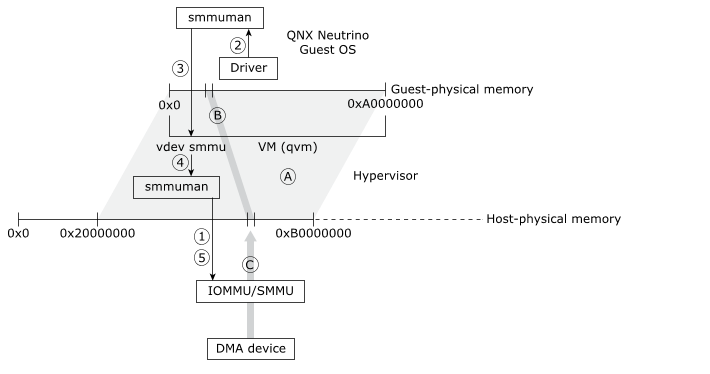

The figure below illustrates how the smmuman service is implemented in a hypervisor guest to constrain a pass-through DMA device to its assigned memory region:

Figure 1. The smmuman service running in a guest uses the

smmuman service running in the hypervisor.

Figure 1. The smmuman service running in a guest uses the

smmuman service running in the hypervisor.For simplicity, the diagram shows a single guest with a single pass-through DMA device:

- In the hypervisor host, the VM (qvm process instance) that will host the guest uses the smmuman service to program the IOMMU/SMMU with the memory range and permissions (A) for the entire physical memory region that it will present to its guest (e.g., 0x20000000 to 0xB0000000).

This protects the hypervisor host and other guests if DMA devices passed through to the guest erroneously or maliciously attempt to access their memory. It doesn't prevent DMA devices passed through to the guest from improperly accessing memory assigned to that guest, however.

- In the guest, the driver for the pass-through DMA device uses the SMMUMAN client-side API to program the memory allocation and permissions (B) it needs into the smmuman service running in the guest.

- The guest's smmuman service programs the smmu virtual device running in its hosting VM as it would an IOMMU/SMMU in hardware: it programs into the smmu virtual device the DMA device's memory allocation and permissions.

- The smmu virtual device uses the client-side API for the smmuman service running in the hypervisor host to ask it to program the memory allocation and permissions (B) requested by the guest's smmuman service into the board's IOMMU/SMMU(s).

- The host's smmuman service programs the pass-through DMA device's memory allocation and permissions (B) into the board IOMMU/SMMU.

The DMA device's access to memory (C) is now limited to the region and permissions requested by the DMA device driver in the guest (B), and the guest OS and other components are protected from this device erroneously or maliciously accessing their memory.
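The relationship between the guest's region (A), the device's allocation (B), and an individual access (C) can be sketched as two nested range checks. This is a simplified model rather than the smmuman API: the `Region` class and both functions are hypothetical, all addresses are treated as belonging to one address space, and the end address is assumed to be exclusive.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    start: int
    end: int               # exclusive (assumption for this sketch)
    writable: bool = True

    def contains(self, start, end):
        return self.start <= start and end <= self.end


def grant_device_region(guest_region, requested):
    """Host side: accept (B) only if it lies inside the VM's region (A)."""
    if not guest_region.contains(requested.start, requested.end):
        raise ValueError("device allocation is outside the guest's memory")
    return requested


def access_allowed(device_region, addr, length, write):
    """An access (C) succeeds only inside (B) with compatible permissions."""
    if not device_region.contains(addr, addr + length):
        return False
    return device_region.writable or not write


# (A): the example range given above; (B): a hypothetical read-only buffer.
a = Region(0x20000000, 0xB0000000)
b = grant_device_region(a, Region(0x30000000, 0x30010000, writable=False))
print(access_allowed(b, 0x30000000, 0x100, write=False))  # inside (B), read
print(access_allowed(b, 0x40000000, 0x100, write=False))  # inside (A) only
```

The second access lies inside the guest's memory (A) but outside the device's allocation (B), which is the case that only the guest-side smmuman service is able to block.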

Note that for the following reasons the pass-through DMA device's memory allocation and permissions (B) can't simply be allocated when the guest is started, and must be allocated after startup:

- The VM hosting the guest doesn't know what memory mappings the guest will use for its DMA devices.

- A driver in the guest may change its memory mappings.

- A guest's DMA device driver (e.g., a graphics driver) may dynamically create and destroy memory regions while the guest is running.

You should also use the smmuman service running in the hypervisor host to program the board IOMMU/SMMUs with the memory ranges and permissions for the entire physical memory regions that every VM in your system will present to their guests, so that any pass-through devices owned by these guests won't be able to access memory outside their guests' memory regions.

For more information about how to use the smmuman service in a QNX guest, see the SMMUMAN User's Guide.

Linux guests don't support the smmuman service; however, you should still use the smmuman service in the hypervisor host to program the IOMMU/SMMU with the memory range and permissions for the entire physical memory regions that the hypervisor host will present to each Linux guest.

Running smmuman in guests on ARM platforms

To run the smmuman service, a guest running in a QNX hypervisor VM on ARM platforms must load libfdt.so. Make sure you include this shared object in the guest's buildfile.